LLM-in-a-Box template

Motivation

The proliferation of Large Language Models (LLMs) has created significant opportunities for innovation across research domains. However, using these tools effectively beyond the consumer-oriented flagship products (ChatGPT, Claude workspace) remains a substantial challenge. Researchers often face a choice between relying on proprietary, cloud-based APIs, which raise concerns about cost, data privacy, and scientific reproducibility, and undertaking the complex engineering task of self-hosting open-source models. Reliance on a few dominant providers also creates powerful gatekeepers: whoever controls the LLM interface can dictate which tools a user sees, what responses are surfaced, and even whether a third-party tool can be installed in the first place. The alternative, self-hosting, carries a technical barrier that often requires dedicated support from Research Software Engineers (RSEs), a role that is crucial but not available to every research group.

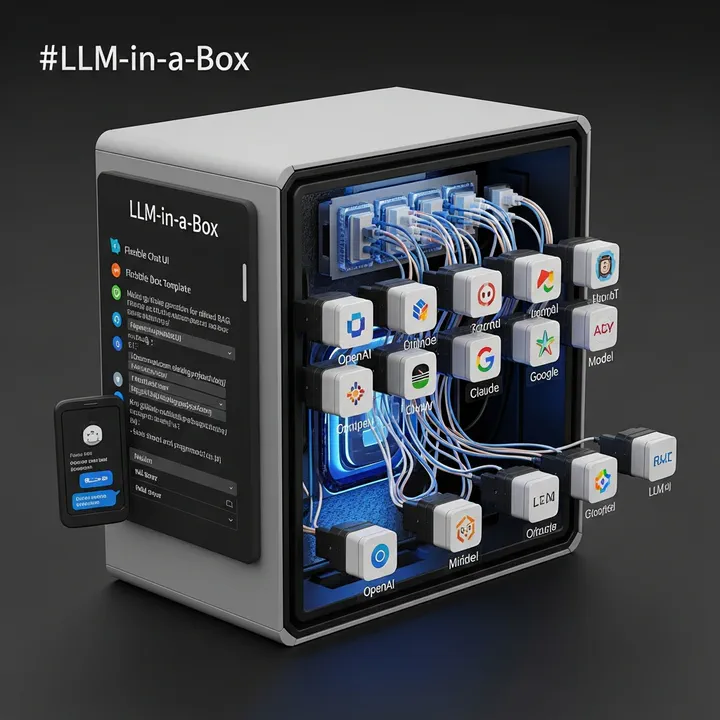

LLM-in-a-Box is a templated project designed to democratize access to generative AI for the research community. It provides a cohesive, containerized stack of open-source tools that can be deployed with minimal configuration, effectively packaging the expertise of an RSE into a reusable template.

Template description

This template provides an easy-to-deploy, self-hostable stack to make the generative AI ecosystem more approachable for research and education. It unifies access to both commercial and local models (via Ollama) through a flexible chat UI and a single API endpoint, enabling private, reproducible, and sovereign AI workflows.

This template project contains:

- A flexible chat UI: Open WebUI

- Document extraction for refined RAG: Docling

- A model router: LiteLLM

- Model servers: Ollama and vLLM

- State storage: Postgres (https://www.postgresql.org/)

This template is built with cruft, so it is easy to update. Secrets are managed with sops and age, and Traefik serves as the reverse proxy.
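To illustrate what a single API endpoint means in practice, here is a minimal sketch of a client that talks to the model router. It assumes the router exposes an OpenAI-style chat completions route, as LiteLLM's proxy does. The base URL, the API key, and the model names are hypothetical placeholders, not values taken from the template; check your own instance's configuration for the real ones.

```python
import json
import urllib.request

# Assumed values for illustration only; replace with those of your deployment.
BASE_URL = "http://localhost:4000"  # hypothetical address of the model router
API_KEY = "sk-example"              # hypothetical API key


def build_chat_request(model, prompt, base_url=BASE_URL, api_key=API_KEY):
    """Build an OpenAI-style chat completion request without sending it."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        url=f"{base_url.rstrip('/')}/v1/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {api_key}",
        },
        method="POST",
    )


def ask(model, prompt, **kwargs):
    """Send the request and return the text of the first reply."""
    request = build_chat_request(model, prompt, **kwargs)
    with urllib.request.urlopen(request, timeout=60) as response:
        body = json.load(response)
    return body["choices"][0]["message"]["content"]
```

With a running instance, a call such as `ask("ollama/llama3", "Summarize this abstract.")` would target a local model, and switching to a commercial model would only require a different model name (both names here are hypothetical). The client code and the endpoint stay the same, which is the point of routing everything through one API.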

Get it here

See github.com/complexity-science-hub/llm-in-a-box-template to obtain the template and further instructions for creating an instance.